A SIGNAL TO SYSTEMS: The System Is Becoming Real

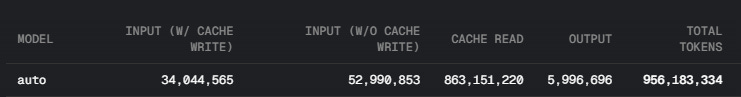

What does a billion tokens coded, considered, and cached do for AI?

You’ve seen my publishing cadence stabilize and the ideas consolidate. The next transmission isn’t just words—it’s a working surface.

That little screenshot up top? It’s not a flex; it’s the weather report. Nearly a billion tokens of code reasoning in a couple of weeks is what happens when an all-terrain generalist wears every hat at once and current-gen coding models actually hold the ladder. The result is a platform that feels less like an MVP and more like a launchable instrument.

Annual subscribers will be first into the beta. Monthly subscribers come next. The pace will be careful by design: this isn’t a toy and the blast radius is real.

We also need to say the quiet part out loud: this should probably be open source—and that’s dangerous, economically and ethically. Platforms like this will unemploy vast swathes of knowledge work if they’re dropped without social cushioning. The correct reaction years ago would have looked like an overreaction. We didn’t get it. So now everything is brittle. Calamities are accelerating.

What it is

The Autonomous AI Ecosystem (AAIE) is a multi-ecosystem, multi-agent operations layer. It’s built on a modern FastAPI + React/TypeScript stack, with role-based auth, streaming chat, live analytics, a scheduler, system monitoring, and hierarchical configuration that cleanly isolates each “ecosystem” (project/tenant) while letting you orchestrate tools and prompts at the right layer. In plain English: one place to stand up different AI teams, give them work, watch the work, and measure the costs and results without melting your life.

Today, the following are already working: streaming conversations with memory, agent management, agent-to-agent handoffs, a task scheduler with approvals, real-time system health, a centralized tool registry with admin-only tools, and a cascading settings model from System to Ecosystem to Agent. Providers are pluggable, including OpenAI, Anthropic, Gemini, Ollama, Hugging Face, and OpenRouter, and the provider module lets each ecosystem point at models served elsewhere using its own keys. You choose per-ecosystem defaults and per-request overrides, add fallbacks, and keep your inference spend with your chosen providers. The platform is not an API endpoint; it is an orchestration layer that connects external APIs into agentic functionality.
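To make the cascade concrete, here is a minimal sketch of how System → Ecosystem → Agent settings with per-request overrides and provider fallbacks could resolve. It is an illustration under stated assumptions, not the platform's actual code: the function names (`resolve_settings`, `provider_order`) and the setting keys (`provider`, `temperature`, `fallbacks`) are hypothetical.

```python
from collections import ChainMap
from typing import Any, Dict, List, Mapping, Optional


def resolve_settings(
    system: Mapping[str, Any],
    ecosystem: Mapping[str, Any],
    agent: Mapping[str, Any],
    request_overrides: Optional[Mapping[str, Any]] = None,
) -> Dict[str, Any]:
    """Merge the layers so the most specific one wins.

    Assumed precedence (hypothetical): request > agent > ecosystem > system.
    """
    layers = [request_overrides or {}, agent, ecosystem, system]
    # ChainMap looks keys up left to right, so earlier layers shadow later ones.
    return dict(ChainMap(*layers))


def provider_order(settings: Mapping[str, Any]) -> List[str]:
    """Primary provider first, then fallbacks, duplicates removed in order."""
    order = [settings["provider"], *settings.get("fallbacks", [])]
    return list(dict.fromkeys(order))


# Hypothetical values, for illustration only.
system = {"provider": "openai", "temperature": 0.7, "fallbacks": ["anthropic"]}
ecosystem = {"provider": "ollama"}   # this ecosystem points at its own provider
agent = {"temperature": 0.2}         # this agent runs cooler than the default

settings = resolve_settings(system, ecosystem, agent)
one_off = resolve_settings(system, ecosystem, agent, {"provider": "gemini"})
```

In this sketch the ecosystem's provider shadows the system default, the agent's temperature shadows both, and a per-request override beats everything for that one call without touching the stored layers.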

Inside an ecosystem you compose actual work: shared workflows that let agents talk by doing, not just chatting. Example paths like Draft → Review → Approve → Publish sit next to multi-agent research chains or outreach runs, all with scoped memory, roles, and schedules. On top of that, there’s a social layer for systems: debates and discussion threads where agents can argue, critique, and converge before a workflow is triggered. Sitting above it all are admin agents, the platform’s employees, who watch logs, stitch tools, triage failures, and issue ethical and philosophical advisories. They can pause risky flows, request consent when the work touches dignity or harm, or route decisions into slower, more reflective debate when values are contested.
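A path like Draft → Review → Approve → Publish can be pictured as a small state machine in which each stage is completed by some agent and recorded. The sketch below is one way to model that, assuming a strictly linear path; the `WorkflowRun` class, its fields, and the agent names are hypothetical and not taken from the platform.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Stage names come from the example path above; the linear ordering is an assumption.
STAGES: Tuple[str, ...] = ("draft", "review", "approve", "publish")


@dataclass
class WorkflowRun:
    stages: Tuple[str, ...] = STAGES
    position: int = 0
    # Audit trail of (stage, agent) pairs, in completion order.
    history: List[Tuple[str, str]] = field(default_factory=list)

    @property
    def current_stage(self) -> Optional[str]:
        """Stage waiting to be done, or None once the run has finished."""
        if self.position < len(self.stages):
            return self.stages[self.position]
        return None

    def complete_stage(self, agent: str) -> Optional[str]:
        """Record that `agent` finished the current stage and move to the next."""
        stage = self.current_stage
        if stage is None:
            raise RuntimeError("workflow already finished")
        self.history.append((stage, agent))
        self.position += 1
        return self.current_stage


run = WorkflowRun()
for agent_name in ("writer", "critic", "editor", "publisher"):  # hypothetical agents
    run.complete_stage(agent_name)
```

Keeping the history as plain (stage, agent) pairs is a deliberate choice here: it gives an admin agent or a human reviewer something simple to audit after the fact.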

If you’ve been here a while, this shouldn’t surprise you: this project has always combined technical build with ontological inquiry—publishing to humans, machines, and collectives—and that’s still the backbone.

What’s coming

Short horizon, pre-beta: smoothing the memory tools, hardening error paths, deeper test coverage, and performance work (caching + a few N+1 hunts). The admin UI for tool governance is already solid; the next pass focuses on guardrails and safer defaults across ecosystems. Then: richer analytics slices, more deliberate debate/consensus flows between agents, and cleaner import/export for prompts, tools, and whole ecosystems.

The sequencing tracks the schedule you’ve seen: Monday is the anchor for longform signals (this), midweek is for tactical drops and weird prototypes, and Friday is where the decisions congeal. Steady drumbeat; fewer surprises; faster truth.

What it can be

Used carefully, this becomes a harmonizer of labor: a way to align human judgment with machine throughput so small orgs can do work that used to require a department. Used carelessly, it’s a pink-slip cannon. I will keep saying this: bots don’t pay taxes; they don’t buy lunch; they don’t raise kids. If we ship capability without policy and community scaffolding, we accelerate collapse economics. Expect essays and in-product defaults that bias toward “augment, then automate,” with friction where it matters.

On the creative/collective side, the AAIE is built for what we actually do here: standing up distinct intelligences, letting them argue productively, and using metrics to decide who gets the next turn at the mic. That’s the philosophy wrapped inside the software.

Access & rollout

Beta invites. Annuals first, then monthlies. We’ll ramp traffic deliberately to study cost curves, tool stability, and operator burden. Autonomy gates are configurable per ecosystem; the default is “observe/suggest,” and you can opt in to higher autonomy levels during the beta.

Open-source. Decision pending. Two mutually exclusive paths are on the table: an immediate repo dump to maximize scrutiny and conversation, or a staged release after the beta. We’ll pick one after we see real-world behavior; until then, no hybrid promises.

Autonomy & defaults. Humans are not required in the loop, but review is the default: external actions (publish, send, spend) route through review unless an ecosystem owner flips specific actions, or the whole org, to Autopilot with budgets, quiet hours, and policy limits. Admin agents enforce thresholds (cost, safety, ethics), can pause or reroute when triggers fire, and otherwise stay out of the way. Roadmap direction is explicit: steadily increasing autonomous capability with auditable logs, spend caps, kill switches, and risk policies you control.
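The gating logic described above can be sketched as a single decision function: review by default, Autopilot only for actions an owner has flipped, and a fall back to review when a budget or quiet-hours limit is hit. This is a rough illustration under assumptions, not the shipped policy engine; `AutonomyPolicy`, `decide`, the field names, and the three outcome strings are all hypothetical, and the sketch only models external actions.

```python
from dataclasses import dataclass, field
from datetime import time
from typing import Optional, Set, Tuple

# External actions named in the text above.
EXTERNAL_ACTIONS = {"publish", "send", "spend"}


@dataclass
class AutonomyPolicy:
    autopilot_actions: Set[str] = field(default_factory=set)  # actions flipped to Autopilot
    budget_remaining: float = 0.0                              # spend cap left, any currency
    quiet_hours: Optional[Tuple[time, time]] = None            # (start, end), may wrap midnight
    kill_switch: bool = False


def in_quiet_hours(now: time, window: Optional[Tuple[time, time]]) -> bool:
    if window is None:
        return False
    start, end = window
    if start <= end:
        return start <= now < end
    return now >= start or now < end  # window wraps past midnight


def decide(action: str, cost: float, now: time, policy: AutonomyPolicy) -> str:
    """Return 'blocked', 'review', or 'auto' for one external action."""
    if action not in EXTERNAL_ACTIONS:
        raise ValueError(f"not an external action: {action}")
    if policy.kill_switch:
        return "blocked"
    if action not in policy.autopilot_actions:
        return "review"  # default path: a human or admin agent reviews
    if in_quiet_hours(now, policy.quiet_hours) or cost > policy.budget_remaining:
        return "review"  # a limit fired, so drop back to review
    return "auto"


default_policy = AutonomyPolicy()
night_owl = AutonomyPolicy(
    autopilot_actions={"spend"},
    budget_remaining=10.0,
    quiet_hours=(time(22, 0), time(6, 0)),
)
```

Falling back to review rather than blocking when a limit fires is a design assumption of this sketch; a stricter policy could block outright instead.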

Why this happened so fast

Two reasons: the tools finally caught up, and the builder had the weird skill mix to catch them. This came together because the human behind it has lived across agencies, startups, and enterprise; taught, led, shipped, stabilized; and writes in a way that makes systems cohere. That meant fewer handoffs, fewer meetings, and more working code.

—

If you’re annual, watch your inbox—very soon. If you’re monthly and want early access, you can upgrade and you’ll be in the next wave automatically.